Elaina Chai

BS, Double Major in EE and Physics, Massachusetts Institute of Technology, 2012

MSEE, Massachusetts Institute of Technology, 2014

Admitted to Ph.D. Candidacy: 2014-2015

Email: echai AT stanford DOT edu

Research Interests: Deep Neural Network Hardware Acceleration on FPGAs, LSTM Networks, Low-Energy Optimizations, Information Theory/Source Coding

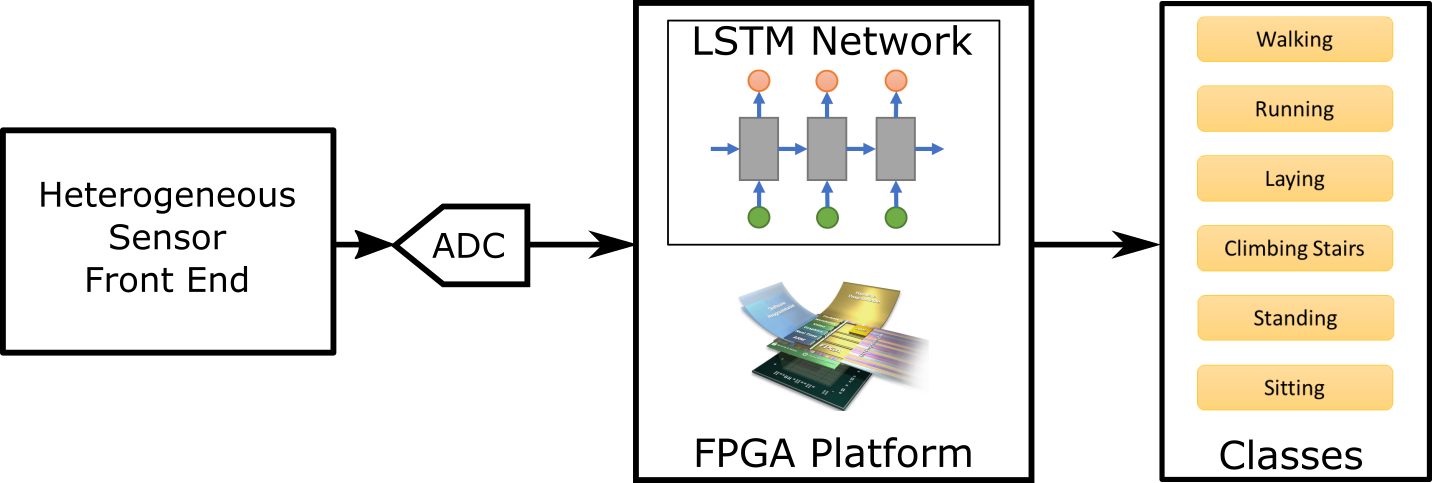

Over the last few years, large increases in dataset sizes and compute resources have fueled the development of deep learning algorithms, leading to unprecedented gains in performance on tasks such as object detection and speech recognition. However, these algorithms were primarily designed for server environments and were trained on highly curated data from a limited range of sensor modalities. Attempts have been made, such as in [1,2], to extend algorithms such as LSTM networks to multi-modal sensor fusion applications such as activity recognition. While these efforts have demonstrated success in terms of classification error rates, deploying these algorithms on energy-constrained embedded platforms remains a challenge in terms of latency and energy compared to the more classical SVM-based approaches.

The work in [3] has shown that by rethinking how data is extracted from camera system front-ends, one can better leverage the information in the raw sensor data (typically lost in the data pre-processing of a conventional system) and, as a result, significantly reduce latency and energy consumption in a machine learning inference system. We are exploring how to extend this approach to the development of the deep learning algorithm in the processing back-end.

By breaking down the barriers across the machine learning system stack, between the raw sensor development and the deep learning back-end, we can better exploit the stochastic properties of deep learning kernels across the entire embedded hardware inference platform, potentially realizing much higher gains in latency and energy metrics than if any single part were developed in isolation. The goal of this research is to develop techniques that better combine raw sensor data from multiple modalities with the development of LSTM neural network architectures for use in embedded reconfigurable systems, and to propose a reconfigurable hardware platform for rapid integration, development, and validation of these techniques with state-of-the-art LSTM neural network back-ends across a wide range of heterogeneous sensor front-ends.

[1] Shao, H., Jiang, H., Wang, F., Zhao, H., “An enhancement deep feature fusion method for rotating machinery fault diagnosis.” Knowledge-Based Systems 110 200-220 (2017)

[2] Yao, S., Hu, S., Zhao, Y., Zhang, A., Abdelzaher, T., “DeepSense: A unified deep learning framework for time-series mobile sensing data processing.” Proceedings of the 26th International Conference on World Wide Web. International World Wide Web Conferences Steering Committee (2017)

[3] Omid-Zohoor, A., Young, C., Ta, C., Murmann, B., “Towards Always-On Mobile Object Detection: Energy vs Performance Tradeoffs for Embedded HOG Feature Extraction.” IEEE Trans. Circuits Syst. Video Technol. Vol. PP (2017)